The Background Story

A year ago, or so, I took some time to search the internet for Free Software that can be used for controlling model railways via a computer. I was happy to find Rocrail [1], one of only a few applications available on the market. I was even happier when I saw that it had been licensed under a Free Software license: GPL-3(+).

A month ago, or so, I collected my old Märklin (Digital) stuff from my parents' place and, together with my little son, started looking into it again after more than 15 years.

Some weeks ago, I remembered Rocrail and thought... Hey, this software was GPLed code and absolutely suitable for uploading to Debian and/or Ubuntu. I searched for the Rocrail source code and found out that it had been hidden from the web some time in 2015 and that the license had apparently been changed to some non-free license (I could not figure out which license, though).

This made me very sad! I thought I had found a piece of software that might be interesting for testing with my model railway. Whenever I stumble over some nice piece of Free Software that I plan to use (or even only play with), I upload it to Debian as one of the first steps. However, I try hard to stay away from non-free software, so Rocrail had already become a non-option for me back in 2015.

I should have moved on from there...

Instead...

Proactively, I signed up with the Rocrail forum and asked the author(s) whether they saw any chance of re-licensing the Rocrail code under the GPL (or any other FLOSS license) again [2]. When I encounter situations like this, I normally offer my expertise and help with such licensing matters for free. By then, I already had the impression that something strange must have happened in the past: the developers had chosen the GPL, later stepped back from that decision, and from then on hid the source code from the web entirely.

Going deeper...

The Rocrail project's wiki states that anyone can request GitBlit access via the forum and obtain the source code via Git for local build purposes only. Nice! So, I asked for access to the project's Git repository, which I was granted. Thanks for that.

Trivial Source Code Investigation...

So far so good. I investigated the source code (well, only the license meta stuff shipped with it...) and found that the main COPYING files had been replaced by this text. These files are located at various places in the source tree, and each had contained the full GPL-3 license text:

Copyright (c) 2002 Robert Jan Versluis, Rocrail.net

All rights reserved.

Commercial usage needs permission.

The replacement happened with these Git commits:

commit cfee35f3ae5973e97a3d4b178f20eb69a916203e

Author: Rob Versluis <r.j.versluis@rocrail.net>

Date: Fri Jul 17 16:09:45 2015 +0200

update copyrights

commit df399d9d4be05799d4ae27984746c8b600adb20b

Author: Rob Versluis <r.j.versluis@rocrail.net>

Date: Wed Jul 8 14:49:12 2015 +0200

update licence

commit 0daffa4b8d3dc13df95ef47e0bdd52e1c2c58443

Author: Rob Versluis <r.j.versluis@rocrail.net>

Date: Wed Jul 8 10:17:13 2015 +0200

update

Getting in touch again, still being really interested and wanting to help...

As I consider such a non-license really dangerous when distributing any sort of software, be it Free or non-free, I posted the text below on the Rocrail forum:

Hi Rob,

I just stumbled over this post [3] [link reference adapted for this blog post], which probably is the one you have referred to above.

It seems that Rocrail contains features that require a key or such for permanent activation. Basically, this is allowed and possible even with the GPL-3+ (although Free Software activists will not appreciate that). As the GPL allows people to share the source code, programmers can easily deactivate license key checks (and such) in the code and re-distribute that patchset as they like.

Furthermore, the current COPYING file offers no real protection at all. It does not protect you as the copyright holder of the code. For example, if people crash their trains with your software, you could actually be held legally liable for that. In theory. Or in the U.S. ( ;-) ). The main reason for having a long, long license text is to protect you as the author in case your software causes trouble for other people. You do not have any warranty disclaimer in your COPYING file or elsewhere. Really not a good idea.

In that referenced post above, someone also writes about the nuisance of license discussions in this forum. I have seen various cases where people produced software and did not really care about licensing. Some ended with a letter from a lawyer. Others ended with some BIG company using their code under the company's own copyright holdership and commercial licensing scheme. This is not paranoia; this is what happens in the Free Software world from time to time.

A model that might be much more appropriate (and more protective of you as the author) is a dual release scheme for the code. A possible approach could be to split Rocrail into two editions: a Community Edition and a Professional/Commercial Edition. The Community Edition must be licensed in a way that allows re-using its code in a closed-source, non-free version of Rocrail (e.g. the MIT/Expat License or the Apache-2.0 License). Thus, the code base belonging to the Community Edition would be licensed as, say, Apache-2.0. For the extra features in the Commercial Edition, you may use any non-free license you want (but please not the COPYING file you have now; it really does not protect your copyright holdership).

The reason for releasing software (or a reduced feature set of it) as Free Software is to extend the user base: the honey jar effect, as practised by many huge FLOSS projects (e.g. ownCloud, GitLab). If people could install Rocrail directly from the Debian / Ubuntu archives, I am sure that the user base of Rocrail would increase. Developers might also pop up and show an interest in Rocrail (like me). However, I know many FLOSS developers (like me) who won't waste their free time working on a non-free piece of software (without being paid).

If you follow (or want to follow) a business model with Rocrail, then keep some interesting features in the Commercial Edition and don't ship that source code. People with deep interest may opt for that.

Furthermore, another option could be dual licensing the code. As the copyright holder of Rocrail, you are free to juggle licenses and apply any license you want to a release. For example, this can be interesting for a free-again Rocrail being shipped via Apple's iStore.

Last but not least, you ship the complete source code with all previous changes as a Git project to those who request GitBlit access. This makes it possible to obtain all earlier versions of Rocrail. The mail I received with my GitBlit credentials contained some text that prohibits publishing the code. Fine. But (in theory) it is not forbidden to share the code with a friend for local usage. This friend finds the COPYING file, frowns and rewinds back to 2015, when the license was still GPL-3+. GPL-3+ code can be shared with anyone and also published. So this friend could upload the 2015 version of Rocrail to GitHub or such and start working on a free fork. You may not want this either.

Thanks for working on this piece of software! It is highly interesting, and I am still sad that it does not come with a free license anymore. I won't continue this discussion and will move on, unless you are interested in any of the above information and ask for more expertise. Ping me here or directly via mail, if needed. If the expertise leads to parts of Rocrail becoming Free Software again, it is offered free of charge ;-).

light+love

Mike

Wow, the first time I got moderated somewhere... What an experience!

This was a genuinely new experience. My post got immediately removed from the forum by the main author of Rocrail (with the forum moderator's hat on). The new part was this: I got really angry when I discovered I had been moderated. Wow! Really a powerful emotion. No harassment in my words, no secrets disclosed, and still... my free speech got suppressed by someone. That feels intense! And it only occurred in the virtual realm, not face to face. Wow!!! I did not expect such intensity...

The reason given for wiping my post, without any other communication, was the following. It is quite a statement to frown upon (this post has also been "moderately" removed from the forum thread [2] a bit later today):

Mike,

I think its not a good idea to point out a way to get the sources back to the GPL periode.

Therefore I deleted your posting.

(The phpBB forum software also allows moderators to edit posts, so the critical passage could have been removed instead, but immediately wiping the full message, well...) Also, just wiping my post without otherwise replying, e.g. with some apology for suppressing my words, really is a no-go. As for the reason given for wiping the rest of the text: any Git user can easily figure out how to get a FLOSS version of Rocrail and continue to work on it from then on. Really.

Now the political part of this blog post...

Fortunately, I still live in an area of the world where the right to free speech still exists. I found out: I really don't like being moderated!!! Especially if what I share / propose is really noooo secret at all. Anyone who knows how to use Git can come to the same conclusion I came to this morning.

[Off-topic, not at all related to Rocrail: The recent votes here in Germany indicate that some really stupid folks here yearn for another, this time highly idiotic, wind of change, in which free speech may end up being a precious good.]

To other (Debian) Package Maintainers and Railroad Enthusiasts...

With this blog post I probably close the last option for Rocrail going FLOSS again. Personally, I think that gate was already closed before I got in touch.

Now really moving on...

Probably the best approach for my new train conductor hobby (as already recommended by the woman at my side some weeks back) is to leave the laptop lid closed when switching on the train control units. I should have listened to her much earlier.

I have finally removed the Rocrail source code from my computer again without building or testing the application. I have not shared the source code with anyone, nor have I shared the Git URL with anyone. I really think that FLOSS enthusiasts should stay away from this software for now. For my part, I have completely lost interest in it...

References

light+love,

Mike

As we are moving closer to the Debian release freeze, I am shipping out a new set of packages. Nothing spectacular here, just the regular updates and a security fix that was only reported internally. Add sugar and a few minor bug fixes.


Enjoy.

New packages

I have been silent for quite some time: busy at my new job, busy with my little monster, writing papers, caring for visitors, living. I have quite a lot of things I want to write about, but not enough time, so this one is very short.

Enjoy.

New packages

Finally, a word about removals: several ConTeXt packages have been removed because they are outdated. These removals will find their way into an update of the Debian ConTeXt package in the near future. The TeX Live packages lost


My plan for


Forgive me, reader, for I have sinned. It has been over a year since my last blog post. Life got busy. Paid work.

OpenStack Newton is released, and uploaded to Sid

OpenStack Newton was released on Thursday, the 6th of October. I was able to upload nearly all of it before the weekend, though there were still a few hiccups. I forgot to upload python-fixtures 3.0.0 to unstable, and only realized it thanks to some bug reports. As this is a build-time dependency, it didn't disrupt Sid users too much, but 38 packages wouldn't build without it. Thanks to Santiago Vila for pointing out the issue here.

As of writing, a lot of the Newton packages haven't migrated to Testing yet. They have been migrating in a very messy way. I'd love to improve this process, but I'm not sure how, other than filing RC bugs against 250 packages (which would be painful to do) so that they would migrate at once. Suggestions welcome.

Bye bye Jenkins

For a few years, I used Jenkins, together with a post-receive hook, to build Debian Stable backports of OpenStack packages. However, nearly a year and a half ago, we started a project to build the packages within the OpenStack infrastructure and to use CI/CD the way OpenStack upstream does. This is done, and Jenkins is gone as of OpenStack Newton.

Current status

As of August, almost all of the packages' Git repositories were uploaded to OpenStack Gerrit, and the build now happens in the OpenStack infrastructure. We've been able to build all the Debian packages of the OpenStack Newton release using this system. This non-official jessie backports repository has also been validated using Tempest.

Goodies from Gerrit and upstream CI/CD

It is very nice to have it built this way. We will be able to maintain full CI/CD in the upstream infrastructure using Newton for the life of Stretch, which means we will have the tools to test security patches virtually forever. Another benefit is that anyone can now propose packaging patches without needing an Alioth account, by sending a patch for review through Gerrit. We hope that this will increase the likelihood of external contributions. These could come, for example, from third-party plugin vendors (e.g. networking driver vendors) or from upstream contributors themselves. They are already used to Gerrit, and they all expected the packaging to work this way. They are all very much welcome.

The upstream infra: nodepool, zuul and friends


At the moment, it contains

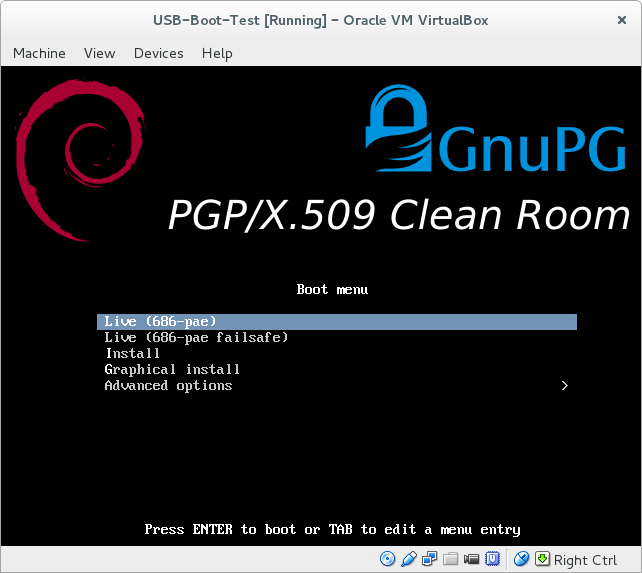

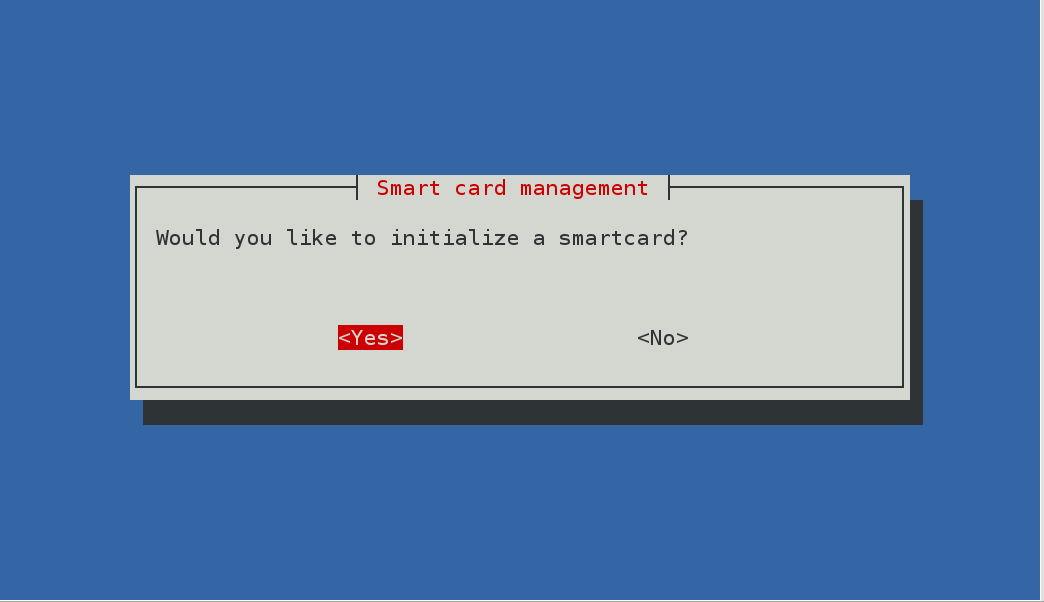


Ready to use today

More confident users will be able to build the ISO and use it immediately by operating all the utilities from the command line. For example, you should be able to

When I gave my


My experiences with Amazon reviewing have been somewhat unusual. A review of a


Concerning the binaries we do expect a few further changes, but hopefully nothing drastic. The most invasive change on the tlmgr side is that cryptographic signatures are now verified to guarantee authenticity of the packages downloaded, but this is rather irrelevant for Debian users (though I will look into how that works in user mode).

Other than that, many packages have been updated or added since the last Debian packages, here is the unified list: